Mojo -- More of a Protocol

Mojo Overview

No one knows Mojo better than its designers, so the best introduction is Intro to Mojo & Services. Here I provide only a brief overview of Mojo.

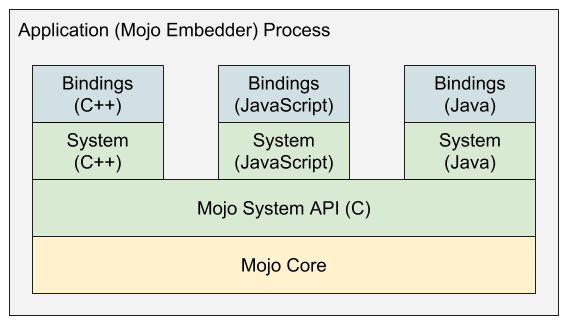

Mojo is an Inter-Process Communication (IPC) framework. Before Mojo came out, Chromium already had an IPC framework implemented with C++ macros, now called legacy IPC. To reduce redundant code and decompose the browser into services, Chromium refactored the underlying implementation of legacy IPC and created Mojo. Today most IPC in Chromium is written against Mojo, and the underlying implementation of legacy IPC has itself been replaced with Mojo. The figure from the Mojo documentation shows the different layers of Mojo.

Mojo Core holds the core implementation of IPC. It focuses on building the IPC network and routing IPC messages to the right place. The Mojo System API is a thin wrapper around Mojo Core, while the platform-specific logic, such as Windows named pipes and Unix sockets, is implemented in the C++ System layer in the figure above. The C++ bindings layer provides most of the high-level abstractions and is what Chromium code usually relies on.

How Mojo works

The way Mojo works is quite similar to protobuf. First, the user defines interfaces in a mojom file; each interface declares methods that a remote endpoint can call. At build time, the mojom files are compiled into bindings for different languages. For C++, for example, an example.mojom file generates an example.mojom.h header that other source files can include.
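To give a feel for what a binding offers to C++ code, here is a hand-written sketch of the kind of abstract class a mojom interface maps to. It is not generator output: `Example`, `Echo`, and `ExampleImpl` are hypothetical names, `std::function` stands in for Chromium's own callback types, and the serialization and proxy classes that real generated headers contain are left out.

```cpp
#include <functional>
#include <string>

// Hypothetical stand-in for what a generated header might declare for
//   interface Example { Echo(string text) => (string reply); };
// Real generated code uses Chromium callback types, not std::function.
class Example {
 public:
  using EchoCallback = std::function<void(const std::string&)>;
  virtual ~Example() = default;
  virtual void Echo(const std::string& text, EchoCallback callback) = 0;
};

// The receiving side implements the interface like any other C++ class.
class ExampleImpl : public Example {
 public:
  void Echo(const std::string& text, EchoCallback callback) override {
    // The reply travels back through the callback, not a return value.
    callback("echo: " + text);
  }
};
```

The point of the sketch is only the shape: the caller sees plain virtual methods, and replies come back asynchronously through a callback.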

The implementation of the bindings generator is simple and straightforward. Open the mojo/public/tools/bindings/generators/cpp_templates directory and all secrets are revealed.

Yes, Mojo keeps a set of source templates for each language binding. After parsing a mojom file, it simply renders the templates with data taken from the AST. Thus, a good way to add instrumentation is to modify the *.tmpl files.
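The render step can be pictured with a toy example. The sketch below is my own simplification, written in C++ only to stay consistent with the rest of this post: `RenderTemplate` and the `{{name}}` placeholder handling are hypothetical and far simpler than the template engine the real generator uses.

```cpp
#include <cstddef>
#include <map>
#include <string>

// Replaces every "{{key}}" in `tmpl` with its value from `vars`.
// Placeholders with no matching key are left untouched.
std::string RenderTemplate(std::string tmpl,
                           const std::map<std::string, std::string>& vars) {
  for (const auto& entry : vars) {
    const std::string placeholder = "{{" + entry.first + "}}";
    std::size_t pos = 0;
    while ((pos = tmpl.find(placeholder, pos)) != std::string::npos) {
      tmpl.replace(pos, placeholder.size(), entry.second);
      // Skip past the inserted text so it is not scanned again.
      pos += entry.second.size();
    }
  }
  return tmpl;
}
```

Seen this way, instrumenting every generated method amounts to adding one line to the template text before it is rendered.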

Mojo network

A Mojo network is made up of nodes, where a node is a process running Mojo. It works much like a TCP/IP network, with one key difference: a Mojo network has a single broker process. The broker (the browser process in Chromium) is usually a fully privileged process and is responsible for assisting communication between nodes.
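One way to picture the broker's role is introduction: it knows every node and can connect two nodes that do not know each other yet. The sketch below is a toy model under that assumption. `Broker`, `AddNode`, `Introduce`, and `AreConnected` are hypothetical names, and node names are plain strings rather than whatever Mojo uses internally.

```cpp
#include <map>
#include <set>
#include <string>

// Toy model of a node network with a single broker.
class Broker {
 public:
  // Every node registers with the broker when it joins.
  void AddNode(const std::string& name) { nodes_.insert(name); }

  // Introduces two registered nodes so they can talk directly afterwards.
  // Returns false if either node is unknown to the broker.
  bool Introduce(const std::string& a, const std::string& b) {
    if (nodes_.count(a) == 0 || nodes_.count(b) == 0) return false;
    links_[a].insert(b);
    links_[b].insert(a);
    return true;
  }

  // True once the two nodes have been introduced to each other.
  bool AreConnected(const std::string& a, const std::string& b) const {
    auto it = links_.find(a);
    return it != links_.end() && it->second.count(b) > 0;
  }

 private:
  std::set<std::string> nodes_;
  std::map<std::string, std::set<std::string>> links_;
};
```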

Next I will use Chromium as an example to walk through some details of Mojo.

Join a network

The very first thing a new Mojo node has to do is join the Mojo network. In Chromium's case, every child process is spawned by the browser process (the broker in Mojo), and a bootstrap channel is created in advance. To establish the connection with the new child process, the browser process has to pass it a handle to the bootstrap channel. How this is done varies by platform.

// child_thread_impl.cc:193

As the snippet above shows, on Windows and Fuchsia the initial channel handle is read from the command-line arguments. On macOS, it is passed through Mach ports. On Linux, since the child process is forked from its parent, the handle is simply looked up among the globally registered descriptors.
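The command-line case is the easiest one to picture. Here is a minimal sketch of it, not Chromium's code: `ReadHandleSwitch` and the switch name `example-channel-handle` used in the usage note are hypothetical, and a real implementation would go on to wrap the number in a platform handle type.

```cpp
#include <cstdlib>
#include <string>
#include <vector>

// Looks for "--<name>=<integer>" in `args` and stores the integer in `out`.
// Returns false if the switch is missing or its value is not a whole number.
bool ReadHandleSwitch(const std::vector<std::string>& args,
                      const std::string& name, long* out) {
  const std::string prefix = "--" + name + "=";
  for (const std::string& arg : args) {
    // Skip arguments that do not start with the expected prefix.
    if (arg.compare(0, prefix.size(), prefix) != 0) continue;
    const std::string value = arg.substr(prefix.size());
    if (value.empty()) return false;
    char* end = nullptr;
    const long parsed = std::strtol(value.c_str(), &end, 10);
    // Reject trailing garbage such as "7abc".
    if (*end != '\0') return false;
    *out = parsed;
    return true;
  }
  return false;
}
```

A child started with `--example-channel-handle=7` would get 7 back and could then treat it as the handle of its end of the bootstrap channel.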

Once the child process has been launched, the browser process sends an invitation to the child process.

// child_process_launch_helper.cc:168

After the child process accepts the invitation, the platform channel is established. Then the browser process sends a BrokerClient message to tell the child process the name of the broker process. Note that in other setups the inviter may not be the broker. After receiving the message, the child process records the broker name and adds the inviter as a peer, reusing the earlier bootstrap channel.

void NodeController::OnAcceptBrokerClient(const ports::NodeName& from_node,

At this point, the child process has successfully joined the Mojo network.
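The join sequence described in this section can be summarised as a small state machine. This is a toy model of the steps, not Chromium's implementation: `ChildNode`, its states, and its method names are all hypothetical.

```cpp
#include <string>

// Toy state machine for a child node joining the network.
class ChildNode {
 public:
  enum class State { kLaunched, kInvitationAccepted, kJoined };

  State state() const { return state_; }
  const std::string& broker_name() const { return broker_name_; }

  // Step 1: accept the invitation that arrives over the bootstrap channel.
  bool AcceptInvitation() {
    if (state_ != State::kLaunched) return false;
    state_ = State::kInvitationAccepted;
    return true;
  }

  // Step 2: record the broker's name once the inviter announces it.
  // Out-of-order messages are rejected.
  bool OnBrokerClient(const std::string& broker_name) {
    if (state_ != State::kInvitationAccepted) return false;
    broker_name_ = broker_name;
    state_ = State::kJoined;
    return true;
  }

 private:
  State state_ = State::kLaunched;
  std::string broker_name_;
};
```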

Mojo Protocol

As the title suggests, Mojo is more of a protocol. IPC is not something new, but what makes Mojo innovative is that it defines an IPC protocol capable of exchanging information and resources across the process boundary.

Message header

The first layer of a Mojo IPC message is Message. Below is the definition of its header.

// channel.h:61

To illustrate it clearly, below is an ASCII figure.

|<-----16bits------>|<------16bits----->| -

Note that handles are serialized into the extra header only on Windows and macOS.

ChannelMessage header

The second layer is ChannelMessage, which is encoded in the payload field of Message.

// node_channel.cc:27

And the corresponding ASCII figure:

|<-----16bits------>|<------16bits----->|

Note that the data field in ChannelMessage is not fixed-length. Its length depends on the type of ChannelMessage.
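To show how this kind of nesting is peeled apart on the receiving side, here is a sketch with two layers. The layouts are assumptions made up for illustration, not copied from Chromium: `OuterHeader`, `InnerHeader`, their fields, and the `Parse` and `Build` helpers are all hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical outer layer: sizes first, payload after the header.
struct OuterHeader {
  uint32_t num_bytes;         // Total message size, header included.
  uint16_t num_header_bytes;  // Where the payload starts.
  uint16_t num_handles;       // Number of attached handles.
};

// Hypothetical inner layer, stored at the start of the outer payload.
struct InnerHeader {
  uint32_t type;     // Selects how the trailing data is interpreted.
  uint32_t padding;  // Keeps the data 8-byte aligned.
};

struct ParsedMessage {
  uint32_t type = 0;
  std::vector<uint8_t> data;  // Variable-length, depends on `type`.
};

// Peels both layers off `bytes`. Returns false on any size inconsistency.
bool Parse(const std::vector<uint8_t>& bytes, ParsedMessage* out) {
  OuterHeader outer;
  if (bytes.size() < sizeof(outer)) return false;
  std::memcpy(&outer, bytes.data(), sizeof(outer));
  if (outer.num_bytes != bytes.size()) return false;
  if (outer.num_header_bytes < sizeof(outer)) return false;
  if (outer.num_header_bytes > bytes.size()) return false;

  const std::size_t payload_size = bytes.size() - outer.num_header_bytes;
  InnerHeader inner;
  if (payload_size < sizeof(inner)) return false;
  const uint8_t* payload = bytes.data() + outer.num_header_bytes;
  std::memcpy(&inner, payload, sizeof(inner));

  out->type = inner.type;
  out->data.assign(payload + sizeof(inner), payload + payload_size);
  return true;
}

// Builds a message in the same layout, mainly so Parse can be exercised.
std::vector<uint8_t> Build(uint32_t type, const std::vector<uint8_t>& data) {
  OuterHeader outer;
  outer.num_header_bytes = sizeof(OuterHeader);
  outer.num_handles = 0;
  outer.num_bytes = static_cast<uint32_t>(sizeof(OuterHeader) +
                                          sizeof(InnerHeader) + data.size());
  const InnerHeader inner = {type, 0};

  std::vector<uint8_t> bytes(outer.num_bytes);
  std::memcpy(bytes.data(), &outer, sizeof(outer));
  std::memcpy(bytes.data() + sizeof(outer), &inner, sizeof(inner));
  if (!data.empty()) {
    std::memcpy(bytes.data() + sizeof(outer) + sizeof(inner), data.data(),
                data.size());
  }
  return bytes;
}
```

Each layer only needs to understand its own header and can hand the remaining bytes to the next one, which is what makes it cheap to add new message types.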

EventMessage header

One of the ChannelMessage types is EVENT_MESSAGE. EventMessage is in fact a large family of messages. Its header is defined as follows.

// event.h:30

// event.cc:23

And the corresponding ASCII figure:

|<-----16bits------>|<------16bits----->| -

Specifically, for a UserMessageEvent:

|<-----16bits------>|<------16bits----->| -

UserMessage header

As a type of EventMessage, UserMessage also has its own header. Unlike the previous layers, where each message is nested inside the one above it, the UserMessage is attached after the whole EventMessage.

The corresponding ASCII figure:

|<-----16bits------>|<------16bits----->|

For every dispatcher, a DispatcherHeader and a DispatcherData are appended to the MessageHeader.

Summary

In my view, Mojo is more a protocol than a framework. Its clean message layering separates different functions and makes Mojo itself more flexible.