Cast a Closure to a Function Pointer: How libffi Closures Work

Callbacks

This post also has a Chinese version: 把闭包变成函数指针——libffi 闭包原理解析.

A library written in C often exposes an API that accepts a function pointer as a callback, like this:

```c
typedef void (*callback_t)(void* data);
```

And we could use it like this:

```c
typedef struct Context {
...
```

But this is still inconvenient, since we have to manage the lifetime of the data pointer manually, which can easily lead to memory leaks.
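To make the lifetime problem concrete, here is a minimal sketch. The names register_callback, fire_callback, on_event and demo_context are hypothetical stand-ins and do not come from the truncated snippet above. The "library" just stores the callback/data pair and invokes it later. The caller still has to decide when the heap-allocated context can be freed.

```c
#include <stdlib.h>

typedef void (*callback_t)(void *data);

/* Hypothetical "library" that remembers one callback/data pair. */
static callback_t g_callback;
static void *g_data;

void register_callback(callback_t callback, void *data) {
    g_callback = callback;
    g_data = data;
}

/* Hypothetical event that makes the library invoke the callback. */
void fire_callback(void) {
    if (g_callback) g_callback(g_data);
}

/* Hypothetical user context passed through the void* parameter. */
typedef struct Context {
    int counter;
} Context;

static void on_event(void *data) {
    Context *ctx = data;  /* recover the typed context */
    ctx->counter++;
}

/* Registers a heap-allocated context, fires twice, returns the count. */
int demo_context(void) {
    Context *ctx = malloc(sizeof *ctx);
    if (!ctx) return -1;
    ctx->counter = 0;
    register_callback(on_event, ctx);
    fire_callback();
    fire_callback();
    int result = ctx->counter;
    /* The caller owns ctx: it must be freed manually, and only once the
       library can no longer call back with it. */
    register_callback(NULL, NULL);
    free(ctx);
    return result;
}
```

Calling demo_context() returns 2. Forgetting the free, or freeing too early while the library still holds the pointer, is exactly the kind of bug this style invites.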

Closures

If you are familiar with C++, it won't take long to wrap it with std::function, like this:

```cpp
typedef std::function<void()> callback_fn;
```

Then we can rewrite the previous code in a slightly more modern and safer way:

```cpp
typedef struct Context {
...
```

With such a simple wrapper, we can now pass a std::function, in other words a closure, and enjoy all kinds of modern C++ utilities.

A closure typically consists of a pointer to the function and its context (captured variables, etc.). That is exactly what the callback and data parameters in the original function signature are for.
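In C terms, this pairing can be spelled out explicitly. The following is a minimal sketch. The names closure_t, closure_call, adder_env and make_adder are hypothetical and used only for illustration. The fn field plays the role of the callback parameter, and env plays the role of data.

```c
#include <stdlib.h>

/* A closure = code pointer + captured environment. */
typedef struct {
    int (*fn)(void *env, int arg);  /* the code */
    void *env;                      /* the captured variables */
} closure_t;

/* Invoking a closure means calling its code with its own environment. */
int closure_call(closure_t c, int arg) {
    return c.fn(c.env, arg);
}

/* Environment captured by an "adder" closure. */
typedef struct {
    int amount;
} adder_env;

static int adder_fn(void *env, int arg) {
    return ((adder_env *)env)->amount + arg;
}

/* Builds a closure that adds `amount`; env is NULL if allocation failed,
   otherwise the caller must free(c.env) when done. */
closure_t make_adder(int amount) {
    adder_env *env = malloc(sizeof *env);
    if (env) env->amount = amount;
    closure_t c = { adder_fn, env };
    return c;
}
```

For example, closure_call(make_adder(5), 10) yields 15. Handing fn and env to an API with separate callback and data parameters preserves the whole closure. A raw function pointer only has room for fn.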

Raw Function Pointer

But wait: not every callback API is designed like this. For example:

```c
void register_callback_bad(callback_bad_t callback);
```

It accepts only a raw function pointer, which leaves no place for the context, so we can't pass a closure to it. Similarly, C++ won't let you convert a lambda to a raw function pointer if it captures any variables.
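The usual workaround without libffi is to smuggle the context through a global variable. The following is a minimal sketch. It assumes callback_bad_t is void (*)(void), since the original typedef is not shown, and it uses hypothetical implementations of register_callback_bad and fire_callback_bad. The workaround runs, but every "closure" built this way shares one function and one global slot. A second context therefore silently replaces the first.

```c
typedef void (*callback_bad_t)(void);  /* assumed signature: no data parameter */

static callback_bad_t g_bad_callback;

/* Hypothetical API that accepts only a raw function pointer. */
void register_callback_bad(callback_bad_t callback) {
    g_bad_callback = callback;
}

void fire_callback_bad(void) {
    if (g_bad_callback) g_bad_callback();
}

/* Workaround: the "captured variable" lives in a global. */
static int *g_counter;

static void bump_global_counter(void) {
    (*g_counter)++;
}

void demo_global(int *a, int *b) {
    g_counter = a;
    register_callback_bad(bump_global_counter);
    fire_callback_bad();  /* bumps *a */
    /* A "second closure" over b can only overwrite the single global
       context, which also changes what the first registration sees. */
    g_counter = b;
    fire_callback_bad();  /* same function pointer, now bumps *b */
}
```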

So here comes the challenge: could we pass a closure as a raw function pointer? It sounds impossible, but libffi actually achieves it:

After calling ffi_prep_closure_loc, you can cast codeloc to the appropriate pointer-to-function type.

Let’s see the tricks behind libffi.

libffi Implementation

Idea

As explained above, a raw function pointer is just an address and carries no callback-related context at runtime. Thus, the only option is to store the context in some static memory and access it from within the callback function. However, we still need a way to tell callbacks apart, and you may have already guessed it: the address that the function pointer holds can itself serve as the unique identifier, though such usage is quite uncommon.
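This idea can be tried in portable C without any runtime code generation. The approach is to pre-compile a fixed number of distinct functions, give each one its own static slot, and hand out the function whose address belongs to a free slot. This is a minimal sketch of the principle only, not libffi's mechanism: libffi repeats one assembly stub instead of compiling separate C functions. MAX_CLOSURES, closure_slot, alloc_raw_callback, free_raw_callback and add_one are hypothetical names.

```c
#include <stddef.h>

typedef void (*raw_cb)(void);       /* what the API accepts */
typedef void (*ctx_cb)(void *ctx);  /* what we actually want to call */

/* Static memory: one context slot per pre-built entry point. */
struct closure_slot {
    ctx_cb fn;
    void *ctx;
    int used;
};

#define MAX_CLOSURES 4
static struct closure_slot slots[MAX_CLOSURES];

/* Each entry point is a distinct function, so its address uniquely
   identifies the slot it reads. */
#define DEFINE_ENTRY(i) \
    static void entry_##i(void) { slots[i].fn(slots[i].ctx); }
DEFINE_ENTRY(0)
DEFINE_ENTRY(1)
DEFINE_ENTRY(2)
DEFINE_ENTRY(3)

static const raw_cb entries[MAX_CLOSURES] = { entry_0, entry_1, entry_2, entry_3 };

/* Binds (fn, ctx) to a free slot and returns a plain function pointer,
   or NULL when all slots are taken. */
raw_cb alloc_raw_callback(ctx_cb fn, void *ctx) {
    for (size_t i = 0; i < MAX_CLOSURES; i++) {
        if (!slots[i].used) {
            slots[i].fn = fn;
            slots[i].ctx = ctx;
            slots[i].used = 1;
            return entries[i];
        }
    }
    return NULL;
}

/* Releases the slot that belongs to a pointer returned above. */
void free_raw_callback(raw_cb cb) {
    for (size_t i = 0; i < MAX_CLOSURES; i++)
        if (entries[i] == cb) slots[i].used = 0;
}

/* Hypothetical callback for the usage example: increments an int. */
void add_one(void *ctx) {
    ++*(int *)ctx;
}
```

Two "closures" over different variables get two different plain function pointers, and each pointer reaches its own context. The fixed MAX_CLOSURES limit is the price of having no runtime code generation.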

Basically, libffi's idea is to use extra memory to store the data for each closure, but the way that memory is organized is pretty interesting.

Trampoline

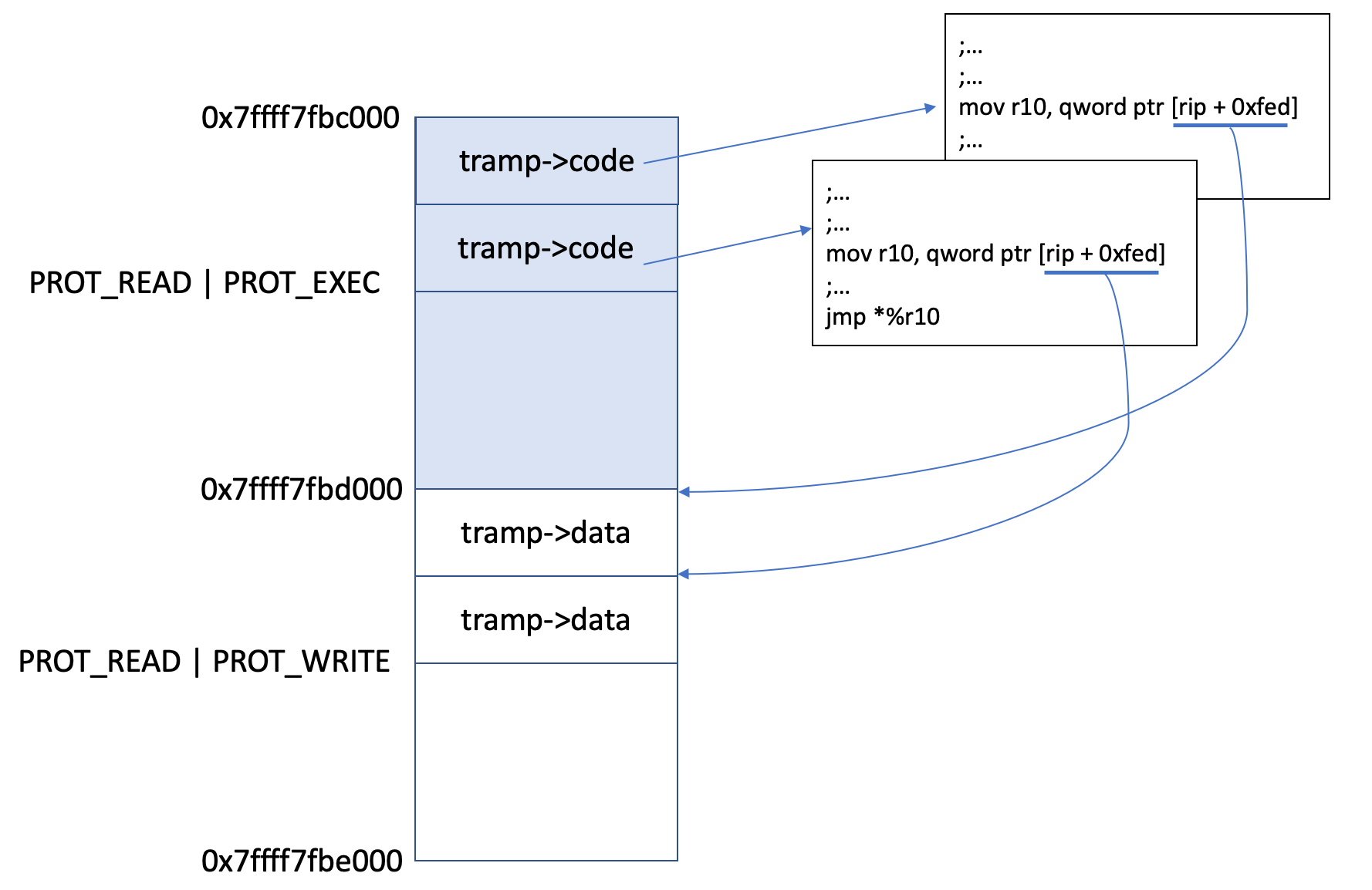

The core concept of the implementation is the trampoline. Each trampoline consists of two important parts: code and data. The code is the entry point of the registered callback. It exists in many identical copies in memory, so each copy has its own address. The data is the context associated with a callback. Below is the code snippet of the trampoline:

```asm
#ifdef ENDBR_PRESENT
...
```

So the secret is this: the offset between the code and the data of a trampoline is fixed! Therefore, the address that serves as a callback function pointer also locates the callback data: adding the fixed offset to it gives the address of the data. After preparing both the real callback address and the callback data, the trampoline jumps to some other wrapper code via jmp *%r10.
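The fixed-offset lookup can be modeled with ordinary memory. In this sketch, a static buffer stands in for the two adjacent regions, and no machine code is stored in the "code" half. tramp_invoke simulates what every code copy does: it derives the data address purely from its own code address. MAP_SIZE = 4096 matches the 4k region size (UNIX64_TRAMP_MAP_SIZE). TRAMP_SIZE = 32 and all function names are assumptions for illustration.

```c
#include <stddef.h>
#include <string.h>

#define MAP_SIZE   4096                    /* one region of code, one of data */
#define TRAMP_SIZE 32                      /* assumed size of one code copy */
#define NTRAMPS    (MAP_SIZE / TRAMP_SIZE)

/* Per-trampoline data, stored at (code address + MAP_SIZE). */
typedef struct {
    void (*target)(void *data);            /* real callback */
    void *data;                            /* closure context */
} tramp_data;

_Static_assert(sizeof(tramp_data) <= TRAMP_SIZE, "data slot too small");

/* Stand-in for the two contiguous regions: [code region][data region]. */
static unsigned char table[2 * MAP_SIZE];

/* Address of the i-th code copy: what would be handed out as the raw
   function pointer (0 <= i < NTRAMPS). */
unsigned char *tramp_code(size_t i) {
    return table + i * TRAMP_SIZE;
}

/* Fills the data slot that sits exactly MAP_SIZE after code copy i. */
void tramp_set(size_t i, void (*target)(void *), void *data) {
    tramp_data d = { target, data };
    memcpy(tramp_code(i) + MAP_SIZE, &d, sizeof d);
}

/* Simulates one code copy: load the data from a fixed offset relative
   to its own address, then "jump" to the target. */
void tramp_invoke(const unsigned char *code) {
    tramp_data d;
    memcpy(&d, code + MAP_SIZE, sizeof d);
    d.target(d.data);
}

/* Hypothetical callback for the usage example: increments an int. */
void add_one(void *data) {
    ++*(int *)data;
}
```

Because every copy uses the same displacement, the data region must be divided with the same stride as the code region. Slot i of the data lives exactly MAP_SIZE bytes after copy i of the code, so no index or lookup table is ever needed.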

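Another question: how is the offset calculated? To keep a fixed offset between code and data, libffi allocates two contiguous 4k (UNIX64_TRAMP_MAP_SIZE) memory regions. Every copy of the code is identical, so the data must be reached with RIP-relative addressing, and RIP points to the next instruction while an instruction executes. Hence the offset is 4k minus the total length of the instructions from the beginning of the trampoline up to and including the current one. Assuming we are on Linux x86_64 with endbr64 available, we can calculate X86_DATA_OFFSET as: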

```
X86_DATA_OFFSET
...
```

And that’s exactly the offset defined in the code snippet above.
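The arithmetic can be checked with a few lines of C. This sketch assumes a particular instruction sequence: the trampoline starts with endbr64 (4 bytes), subq $16, %rsp (4 bytes) and movq %r10, (%rsp) (4 bytes), followed by the RIP-relative movq X86_DATA_OFFSET(%rip), %r10 (7 bytes). It also assumes the first copy's data sits at the very start of the second 4k region. That sequence is an assumption consistent with the %r10/jmp flow described above, since the snippet itself is truncated here. data_offset is a hypothetical helper.

```c
/* Assumed x86-64 instruction lengths (bytes) at the start of a trampoline. */
enum {
    LEN_ENDBR64       = 4,  /* endbr64                           */
    LEN_SUBQ_RSP      = 4,  /* subq  $16, %rsp                   */
    LEN_SAVE_R10      = 4,  /* movq  %r10, (%rsp)                */
    LEN_LOAD_DATA_RIP = 7,  /* movq  X86_DATA_OFFSET(%rip), %r10 */
};

#define TRAMP_MAP_SIZE 4096  /* size of the code region */

/* RIP-relative displacements are measured from the end of the load
   instruction, so every byte up to and including it is subtracted. */
int data_offset(int with_endbr) {
    int rip = (with_endbr ? LEN_ENDBR64 : 0)
            + LEN_SUBQ_RSP + LEN_SAVE_R10 + LEN_LOAD_DATA_RIP;
    return TRAMP_MAP_SIZE - rip;
}
```

Under these assumptions, data_offset(1) is 4096 - 19 = 4077 and data_offset(0) is 4096 - 15 = 4081. A different instruction sequence changes the numbers, but the rule "region size minus the bytes up to the end of the load" stays the same.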

Further Details

An interesting thing is how libffi finds the trampoline table:

```c
static int
...
```

libffi reads all of its memory mappings, locates the text segment, and calculates the offset of the trampoline table. Note that tramp_globals.text is equal to &trampoline_code_table. Then it maps the trampoline table with mmap:
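On Linux, a process's mappings are listed in /proc/self/maps, one per line, in the form "start-end perms offset dev inode path". The following is a minimal sketch of the lookup step, assuming a Linux system. find_mapping is a hypothetical helper, not libffi's function. It finds the mapping that contains a given address and records where that mapping comes from in its backing file. That is the information needed to map the same bytes again later.

```c
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Result of looking up one address in /proc/self/maps. */
struct mapping_info {
    uintptr_t start, end;     /* virtual range of the mapping */
    unsigned long offset;     /* file offset that corresponds to `start` */
    char path[256];           /* backing file, empty if anonymous */
};

/* Finds the mapping containing addr; returns 0 on success, -1 otherwise.
   The file offset of addr itself is info->offset + (addr - info->start). */
int find_mapping(uintptr_t addr, struct mapping_info *info) {
    FILE *fp = fopen("/proc/self/maps", "r");
    if (!fp) return -1;
    char line[1024];
    int found = -1;
    while (fgets(line, sizeof line, fp)) {
        unsigned long start, end, offset;
        char perms[5];
        int path_pos = 0;
        /* %n records where the (optional) path begins. */
        if (sscanf(line, "%lx-%lx %4s %lx %*s %*s %n",
                   &start, &end, perms, &offset, &path_pos) < 4)
            continue;
        if (addr < start || addr >= end) continue;
        info->start = start;
        info->end = end;
        info->offset = offset;
        const char *p = path_pos > 0 ? line + path_pos : "";
        snprintf(info->path, sizeof info->path, "%s", p);
        info->path[strcspn(info->path, "\n")] = '\0';  /* drop newline */
        found = 0;
        break;
    }
    fclose(fp);
    return found;
}
```

Passing the address of a function, for example (uintptr_t)&find_mapping, returns the executable's text mapping and its file path. This is analogous to locating the mapping that contains trampoline_code_table.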

```c
static int
...
```

Note that the trampoline code is repeated with .rept to fill the whole 4k region.
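Mapping a code table next to a data region can be approximated in three steps:

1. Reserve two adjacent pages.
2. Map read-only file contents over the first page with MAP_FIXED.
3. Keep the second page writable for per-trampoline data.

This is a sketch of the technique under stated assumptions, not libffi's code. It assumes Linux/POSIX mmap, uses /proc/self/exe as a stand-in for the library file that contains the trampoline table, and maps from file offset 0. map_code_and_data is a hypothetical helper. A real trampoline table would map the code page PROT_READ | PROT_EXEC. Because the code comes straight from the file, no page ever needs to be both writable and executable.

```c
#define _DEFAULT_SOURCE
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/* Maps `size` bytes of the file at `path` (from a page-aligned `offset`)
   immediately followed by `size` bytes of writable anonymous memory.
   Returns the start of the pair, or NULL on failure. */
unsigned char *map_code_and_data(const char *path, off_t offset, size_t size) {
    int fd = open(path, O_RDONLY);
    if (fd < 0) return NULL;

    /* 1. Reserve both regions at once so they are guaranteed contiguous. */
    unsigned char *base = mmap(NULL, 2 * size, PROT_READ | PROT_WRITE,
                               MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (base == MAP_FAILED) { close(fd); return NULL; }

    /* 2. Replace the first half with the file contents (read-only "code"). */
    void *code = mmap(base, size, PROT_READ, MAP_PRIVATE | MAP_FIXED, fd, offset);
    close(fd);  /* the mapping stays valid after the descriptor is closed */
    if (code == MAP_FAILED) { munmap(base, 2 * size); return NULL; }

    /* 3. The second half stays anonymous and writable: the data region. */
    return base;
}
```

With page = sysconf(_SC_PAGESIZE), the first byte of the returned region is the ELF magic of the executable. Writing to base[page] touches the writable data half, and munmap(base, 2 * page) releases both.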

Summary

To summarize, see the figure below:

Update (08/01/2021): The trampoline mechanism also eliminates the need for memory that is both writable and executable, since the content of tramp->code is generated at compile time.

My Implementation

To check whether my understanding is correct, I also wrote a demo. Check it out on GitHub.

The core library is fewer than 100 lines and should be much easier to understand than libffi. If you find it helpful, don't hesitate to give it a star.